Learned Metric Index in SISAP 2023 Indexing Challenge

Table of Contents

The organizers of the SISAP 2023 announced an Indexing Challenge, opening the door for the Learned Metric Index (LMI) to test its performance on a large dataset and get feedback about its strengths and weaknesses.

Challenge #

The challenge consisted of three tasks. The first was to build an index on a subset of the LAION5B dataset1. The dataset contains 5 billion images, each represented by a 768-dimensional CLIP embedding. The goal was to build an index that could answer 10,000 nearest-neighbor queries with a recall above 90% in the shortest possible time. The organizers also provided PCA projections with 32 and 96 dimensions.

The second task was to produce high-quality binary sketches. These can be considered as the hashes of the original vectors. They were then used in a brute-force search to find the nearest neighbors. The goal was to achieve the highest recall possible. The final task consisted of building an index over the binary sketches.

The team behind the LMI has decided to participate in the first task by indexing 10M deep features represented by 768-dimensional 16-bit floating-point vectors with dot product measure. An architecture with a single multi-layer perceptron (root node) indexed the dataset. The data was partitioned into 122 buckets (leaf nodes). The buckets were searched sequentially based on the probability predicted by the model, with the stop condition set at four buckets.

Results #

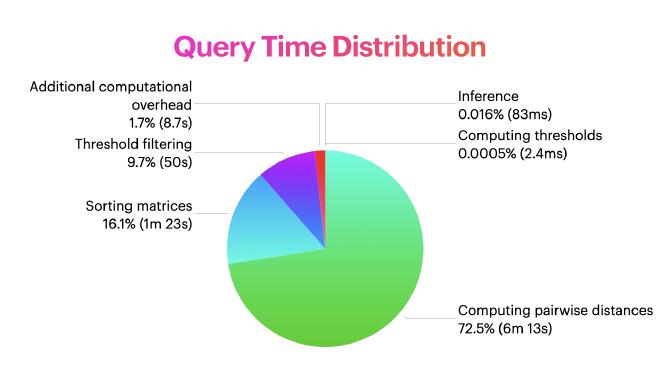

The result revealed a bottleneck in the current implementation of the LMI. The query time distribution suggests that many distance computations were performed while visiting the buckets. The LMI was designed to eliminate distance computations when traversing the index, especially applicable to situations with computationally intensive distance functions. However, the implementation does not extend this advantage to the bucket search. There is no object filtering, and no smaller set of candidates is considered. All distances between the objects and queries are evaluated.

On the positive side, the model’s inference time is relatively short, making an addition of a layer of internal models possible. The optimal number of buckets should be further investigated, as more buckets with fewer objects could benefit the search time. Also, implementing object filtering in buckets should significantly impact the search speed. Finally, parallelizing operations called during the search should be considered.

Conclusion #

The challenge provided a unique opportunity to compare the LMI with other methods and to obtain valuable feedback. The results suggest that the LMI is not yet competitive with other highly optimized solutions. Nevertheless, the results are encouraging, and the query time distribution indicates where the future development efforts of the LMI should be focused.

The code used in the challenge is available at github.com/TerkaSlan/sisap23-laion-challenge-learned-index.

Schuhmann, Christoph, et al. “Laion-5b: An open large-scale dataset for training next generation image-text models.” Advances in Neural Information Processing Systems 35 (2022): 25278-25294. ↩︎